From the Desk of Professor No One

It’s no exaggeration to say that generative artificial intelligence (GenAI) may be one of the most revolutionary and quickly evolving technologies of the modern world. And it’s getting smarter every day. When ChatGPT was first released to the public in November 2022, it would present false information as fact, misunderstand queries, and (in a viral example turned industry joke) fail to count the number of R’s in the word “strawberry.”

Since then, many more companies have released their own models and continue to update them. As of March 2025, ChatGPT boasts OpenAI’s latest models, including the “omni” model GPT-4o (capable of processing text, images, audio, and video) and the o-series models built for higher levels of ‘reasoning’.

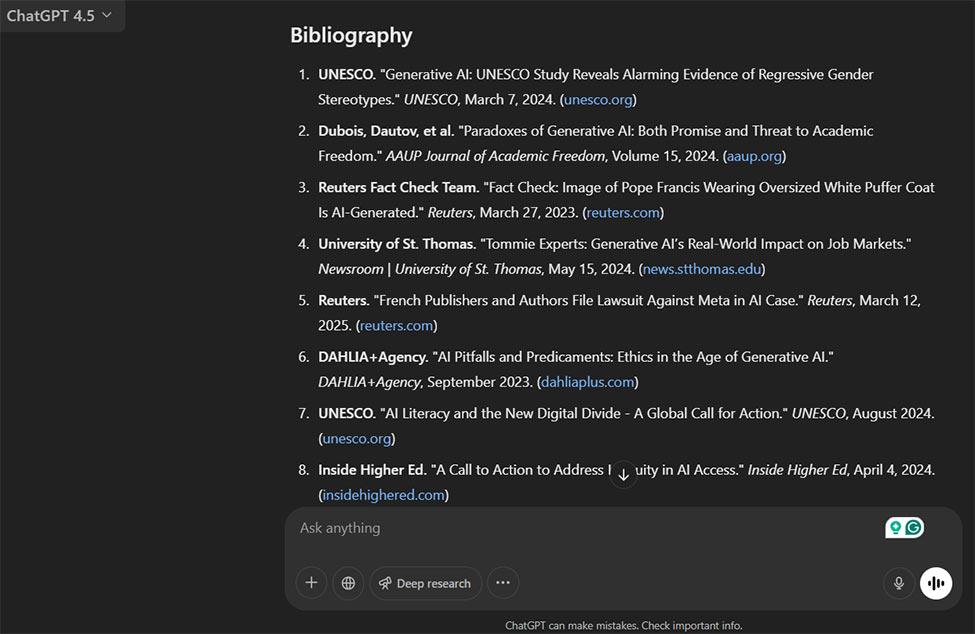

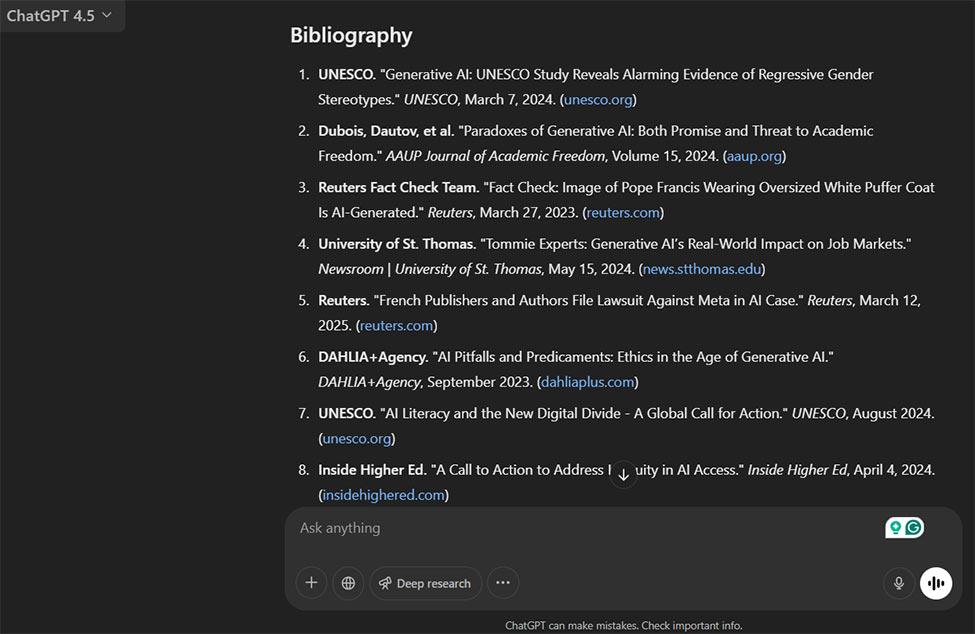

Another new feature is “Deep Research,” which, unlike prior models that responded rather simply to requests, conducts thorough web searches for peer-reviewed studies and industry reports to produce high-quality research papers with accurate citations and often novel conclusions.

What Does It Mean?

Still, many people, especially those of us in academia, remain cautious. The possible advantages seem clear. If we can speed up research, what breakthroughs might follow in healthcare, tech, law, and the humanities?

The concerns, however, remain nebulous and ever-changing. In a world where AI can research a topic in ten minutes, what is the value of assigning essays to students? Will we ever be able to clearly distinguish real images from deepfakes? Will AI challenge social norms or reaffirm bias?

These are difficult questions.

So, we asked the expert.

Hey, ChatGPT—Are You Evil?

To answer the question “What exactly should we be concerned about with generative AI?” we asked ChatGPT, specifically its Deep Research feature.

The prompt: I'd like a critical academic paper that discusses the drawbacks and pitfalls of generative AI. What are the biggest concerns? Can AI challenge social norms, or does it reinforce existing biases? What role do corporations have in balancing ethical decisions and the need to use these tools? What role do universities play in ensuring fair AI literacy and access for students of all backgrounds?

Drawing on 34 sources, ChatGPT delivered what it describes as a 15-page “deep analysis in Chicago style discussing the drawbacks and pitfalls of generative AI across various applications and disciplines.”

Explore the live prompt and results.

Watching a Machine Think

The following video (slightly edited and significantly sped up for time) shows the process of a Deep Research query. After the user sends an initial query, ChatGPT usually asks a few follow-up questions, such as the desired length and format, preferred tone, and any additional areas of focus, and then it begins to think. This process can take anywhere from a few minutes to twenty.

As it thinks, users can follow along as ChatGPT explains its reasoning. In the sidebar (starting at 0:15), ChatGPT describes its actions, explaining not only what it’s searching for but also why it chooses certain sources, which avenues of thought it is considering, and how it reasons through its next steps.

Not only can the user comb through the full list of sources, but each source is also embedded as a link after the relevant section of the paper.

Too Long, Didn't Read

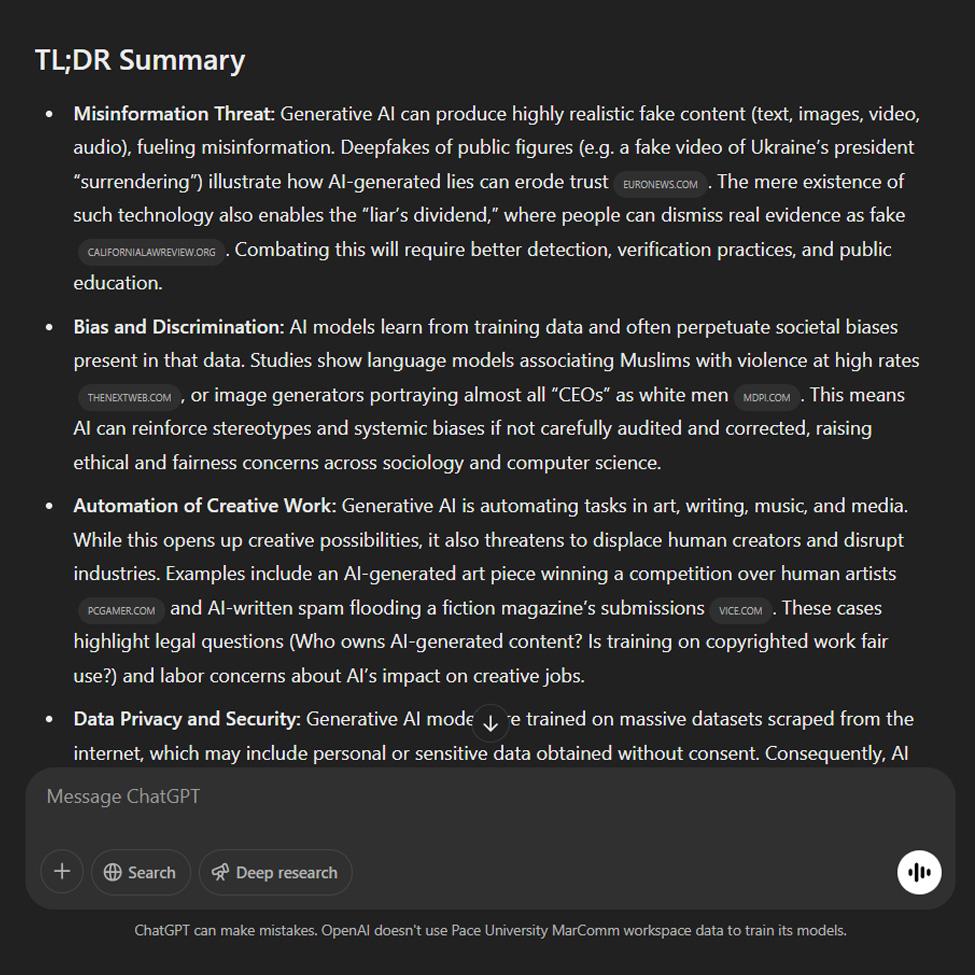

Never fear: we also asked ChatGPT to summarize its findings. It listed misinformation, bias and discrimination, and the automation of creative work among its top concerns, and it discussed the roles of both corporate responsibility and higher education in ensuring a more sustainable model of ethical AI growth.

But Really, What Does It Mean?

It’s a bit dystopian to ask an AI chatbot what’s wrong with AI. (Thankfully, it didn’t say “absolutely nothing, please continue to give me your data.”) But as AI becomes more capable, it is up to humans how we use it. How we regulate it. How much we trust it. Many of the concerns quickly become existential. In a world where AI is becoming smarter, what does that mean for us? As AI seems to become more human, will humans somehow become less?

When considering how we should use AI, or what place humans have in an AI world, perhaps the wisdom of the most famous fictional AI of all, HAL 9000 from 2001: A Space Odyssey, can offer some guidance: ‘I’m putting myself to the fullest possible use, which is all I think that any conscious entity can ever hope to do.’

More from Pace

Pace President Marvin Krislov recently participated in a conversation at Google Public Sector GenAI Live & Labs as part of the Future U. podcast. He joined higher ed leader Ann Kirschner, PhD, and Chris Hein, Field CTO at Google Public Sector, to discuss the evolving role of AI in higher education.

From privacy risks to environmental costs, the rise of generative AI presents new ethical challenges. This guide developed by the Pace Library explores some of these key issues and offers practical tips to address these concerns while embracing AI innovation.

Generative AI is reshaping how we create, communicate, and engage with the world—but what do we gain, and what do we risk losing? This thought-provoking guide challenges you to move beyond fear or hype, applying critical thinking to AI’s evolving role in media, creativity, ethics, and society.